
+++
title = "Decentralized forge: distributing the digital means of production"
date = 2020-02-26
+++

In the world of today, more and more software gets built. But increasingly, the tools used to produce software are falling into the hands of a few giant corporations. In 2018, Github was acquired by Microsoft for more than 7 BILLION (milliards) dollars. In this article, we'll explore what's wrong with Github, discuss alternatives that popped up in the past decade, and see what kind of decentralized cooperation the future holds.

In this article, I will argue that we need to do something about it, whether that means a federated forge, decentralized authentication, or peer-to-peer tooling.

Github considered harmful

For those unaware, Github is an online forge. That is, a place where ordinary people and professionals alike sign up to cooperate on projects. While the more complicated aspects of code collaboration are handled by the git version control system, Github brings a user-friendly web interface where you can browse and file bugs (called issues) and submit or comment on patches (pull requests).

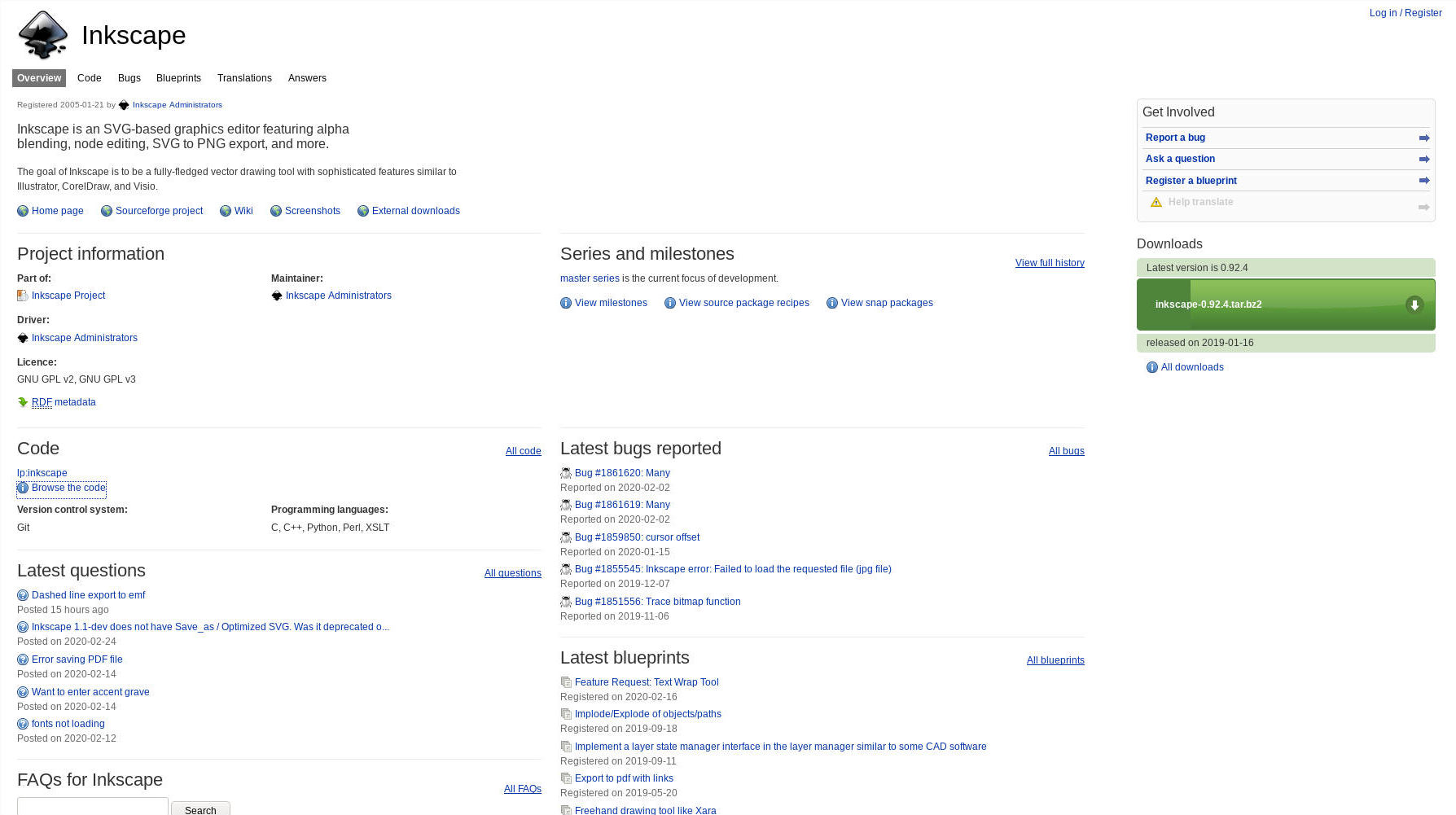

Github looked really nice, because it was so much less frightening to newcomers than previous web forges such as Sourceforge or Launchpad. But of course, the startup developing Github did not intend to help humanity, and instead captured a whole generation of developers into a walled garden, by making it impossible to participate in the projects hosted there without a Github account.

By building such a popular centralized forge, they created a strange situation in which most public software development takes place on Github, with a single company that can decide the fate of your project:

- in October 2019, Github removed a militant application in Catalonia, on orders from the Spanish government, which has been busy crushing opposition

- in July 2019, Github banned all accounts that had used its services from Iran-attributed IP addresses (TODO: see how IP subnets work and why banning per country is stupid)

- a few years before, Popcorn Time repositories were taken down

These painful developer stories with Github are unfortunately far from isolated cases, even though just a tiny portion of Github's wrongdoings attracts media coverage. But to understand how we've reached this situation, we need to understand what made the success of Github in the first place.

The point of Github

Even before Github existed, free software forges were already widespread. GNU Savane (forked from Sourceforge), Launchpad and Fossil SCM are notable names in the forge ecosystem.

However, it is often argued their User Interface is not very user-friendly. Many new developers would be surprised that, although README files have been hanging around for a long time, most forges of the time did not display the README content on the project homepage, but would rather display a list of latest commits.

For example, a project's homepage on Launchpad takes me one click to get to the project's files, and an additional click to see the README.

Arguably, their UIs were centered around technical notions (imported from the mathematics lying underneath) such as branches and refs, catering to an already-familiar crowd, while newcomers were left confused.

Github's new features were not attractive to well-established projects, which had had the time to develop their own solutions and were rightfully concerned about Github's misdeeds. But to many smaller projects, Github's features were crucial to get up to speed and produce quality software: continuous integration/delivery (CI/CD), integrated task boards (kanban), 3rd party tooling for code coverage and testing.

Nothing Github did was new. But surely, Github was breaking away from the Keep It Simple Stupid approach and building an integrated solution where everything you need for software development is within your reach. This was an appeal to many people.

Selfhostable centralized forges

With Github's growing power and misdeeds, the last decade has seen a few projects rise up to provide the same kind of experience, but in a selfhosted environment. There's quite a bunch of those, but in this article I'll focus on Gitlab and Gitea.

Gitlab

Gitlab started about a decade ago as a free-software alternative to Github. According to their history on Wikipedia, they started adopting an « open-core » model in 2014. This means they now release a free-software Community Edition which has fewer features than the Enterprise Edition.

While Gitlab is not the worst business in the field, it's important to remember they are a profit-driven organization with a track record of bad decisions:

- renting "cloud" servers from Google (used to be Microsoft), so giving money to Gitlab means you give money to Google

- forbidding political discussions between workers about serving unethical clients (decision reversed after an uproar)

- including invasive user tracking on gitlab.com (decision reversed after an uproar)

- despite aggressive pink-washing, asking women to wear "short but somewhat formal dress and heels" then trying to cover up the story by marking forum posts addressing the issue as confidential

On the more technical side of things:

- Gitlab works very poorly without Javascript in the browser: even README files cannot be displayed with Noscript or a Tor Browser

- Gitlab is very resource-hungry, recommending 4GB of RAM for hosting an instance

- Gitlab feels very slow to use, even self-hosted on a local network

- Gitlab is notorious in the selfhosting community for painful upgrades

Gitea

Gitea is a community fork of Gogs started in 2016. It should be praised that the Gogs maintainer refuses to « Move fast and break things ». However, I'm glad the project was forked to promote community organizing. Over the past years, Gitea has implemented a lot of new features. Some of those have found their way upstream into the Gogs codebase, albeit sometimes with different implementations. This way, everybody wins!

Gitea currently powers the tildeverse.org forge, and every time I've used it so far has been a pleasure. However, Gitea is not as featureful as Gitlab, as explained on their website in a detailed comparison. For example, it does not support continuous integration/delivery pipelines out-of-the-box.

The "many accounts" problem

Over the past decade, Gogs/Gitea and Gitlab have contributed a great deal to the free software ecosystem. Adopting a modern forge has allowed projects such as Debian and Gnome to build more reliable software more quickly. But to developers contributing to several projects, a new problem has emerged.

Part of the user-friendliness of the Github experience is the ability to use a single account to contribute to any project... or at least any project hosted on this giant walled garden. Both Gitlab and Gitea have been designed with this centralized mindset: you need an account on every server where you want to contribute something, whether it's reporting a bug or submitting a merge request.

This approach is not a problem for a huge corporation that seeks to entrap users in its ecosystem (it's actually a feature), but is a massive pain for end-users who want to report issues. Because many projects now selfhost their own forge, you may need to create separate accounts on 0xacab.org (Riseup forge), framagit.org (very popular in France) and tildegit.org (the tildeverse forge).

As a result, we end up with a collection of very small walled gardens. Their cultures are thriving, but what's the point in all this if we can't cooperate across gardens? As an end-user, I would like to be able to contribute to any public project from a single identity, without having to deal with « many accounts ». And as we'll see, there's more than one way to tackle the problem.

Git is already decentralized

As we've stated before, a forge is a collection of tools to ease cooperation on a project. A forge itself is built on top of one or more Version Control Systems (VCS). By far, the most popular VCS today is git. But mercurial is still pretty popular, and darcs is still maintained and has even inspired a modern successor written in Rust (pijul).

More specifically, the VCS just mentioned are Distributed Version Control Systems (DVCS). This means they can be used without a central server, contrary to previous systems. If you're not familiar with the differences between client/server VCS and distributed VCS, I'd recommend reading the Wikipedia page on Distributed version control.

The key point is that in a DVCS every person has a complete copy of the project, so that they can apply proposed changes to their copy of the repo. Historically, git is meant to be used with email to send and review patches. That's how Linux kernel development works, and such distributed workflows for email patches are documented in the official git book.

An article by Drew DeVault, a maintainer of the Sourcehut forge, got a lot of attention lately for advertising existing federated cooperation workflows built on email. In the article, he rightfully argues we should do a better job of teaching such workflows to newcomers, and integrate them with smoother tooling.

git email is not enough

But the point missed by the "git+email" clique in my view, is that different tools cater to different audiences. An email-based workflow isn't tedious to terminal-friendly developers, but it considerably raises the bar for people of different backgrounds to contribute to projects. These contributions can be simple bug reports, but let's not forget that forges are used to manage more than code: documentation, websites, translations (among others).

Versioning tools have long been used by designers and writers and many other folks, who back in the day favored mercurial and darcs for their user-friendliness. In comparison, the latest newcomer git was invented by and for kernel people to streamline very complex workflows, but is notoriously hard for newcomers to use, even for very simple tasks such as keeping different branches up-to-date with minor, non-conflicting changes (TODO: ensure that's true and investigate other major git painpoints).

After years of using git, I still sometimes have to read docs and tutorials to achieve what I consider basic functionality. Sometimes I end up force-pushing commits because it enables me to reach my goal-state in seconds instead of minutes, even though I'm convinced being able to rewrite history is the worst kind of anti-feature for a VCS. I'm far from an isolated case and it's become a meme in many workplaces and non-profit communities that you often have to spend more time making git happy than working on your actual patches.

TODO: insert mandatory tar/git MEME (xkcd or commitstrip)

Github and other forges have succeeded in making git workflows transparent and usable by people who do not have the time or knowledge to script their mailbox and connect many smaller tools together. For example, to a person reporting a bug, a forge's issue tracker makes it easier to see if this bug has already been reported, and maybe even patched. This is something that can and should be addressed in email-based workflows by piping more parts together, like the Debian project does with its bugtracker. (TODO: link)

But at some point, we can acknowledge that modern centralized forges have brought something to the table, and that we can't currently replicate such a smooth experience easily with decentralized workflows. In the rest of this post, I'll outline what it would take to achieve a user-friendly experience with a decentralized forge, and take a look at current strategies being implemented by various projects.

TODO: git vs mercurial link (mercurial not dead) ?? maybe not the right place and time but many can be mentioned

TODO: mention git email niceties https://git.causal.agency/imbox/about/git-fetch-email.1

What it takes to build a decentralized forge

As we've seen in the previous sections, a forge consists of higher-level abstractions and tools to ease cooperation through a version control system. While this is not an exhaustive list, we'd like a decentralized forge to provide:

- tickets: users can open issues and comment on them to report bugs and have debates

- merge requests: users can propose patches and maintainers can review them and merge them

- continuous integration/delivery: running code in an automated fashion every time changes have been submitted

While each of these features is different in its implementation, they all require some form of signaling mechanism (to advertise new changes) and some semantics (to describe those changes). The signaling can either take place within the git repository itself (in-band) or use another protocol (out-of-band).

With centralized forges, we use some out-of-band signaling with custom semantics. Github and Gitlab for instance provide HTTP APIs to this goal. In a decentralized setup, we need a mechanism to propagate the state of a repository throughout the network. This state can be based on a consensus of peers, on a gossip of peers, or can be derived from authoritative sources.

Source of trust

At some point in this article, we have to take a minute and talk about trust. Because everything we do on the Internet eventually boils down to trust and how we establish it. Zero-knowledge proofs are gaining traction and producing useful results, but large portions of our computing still rely on establishing a chain-of-trust with some 3rd parties, if only for routing to another machine (eg. BGP, TCP).

The same goes for a forge: starting with a repository ID we establish a chain of trust to retrieve the current state of the project. In both centralized and federated setups, we rely on location-based addressing, typically via the HTTP or SSH protocol. That means we declare where we want to find the latest information about the project, and explicitly trust this provider (location) to serve us correct information.

This is precisely what we do when we git clone https://github.com/foo/bar:

- establish a chain of trust over the DNS protocol to determine what IP addresses we can reach github.com with

- open a connection to one of those addresses, and trust our ISP's chain of trust to deliver packets to Github

- ask Github to provide us with the repository foo/bar
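The steps above can be sketched in a few lines of Python. This is only an illustration of location-based addressing, not what git actually runs internally: `split_location` and `resolve` are hypothetical helper names, and the port number is an assumption (HTTPS).

```python
import socket
from urllib.parse import urlsplit


def split_location(url):
    """Split a clone URL into the host we must trust and the repository path."""
    parts = urlsplit(url)
    return parts.hostname, parts.path.lstrip("/")


def resolve(host, port=443):
    """Ask DNS which IP addresses serve this host (requires network access)."""
    infos = socket.getaddrinfo(host, port, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


host, repo = split_location("https://github.com/foo/bar")
print(host)  # the location we decide to trust
print(repo)  # what we ask that location to serve
```

Nothing in the URL says anything about the content itself: whatever the server at that location answers is what we get.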

However, distributed peer-to-peer protocols take a different approach and usually resort to content-based addressing. Instead of specifying where to find a specific piece of content, we describe what piece of content we want specifically. By performing calculations (hashing) on the content, we can determine a unique identifier (a hash) for the content.

This hash can then be used as a secure identifier for the content. When we're trying to get this piece of content on a second machine, we can verify the content we get by calculating its own hash and comparing it to the hash of the content we're looking for. This is, at a high level, how the Bittorrent and IPFS protocols work.
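Here is a minimal sketch of that verification step. SHA-256 is picked here only for illustration; real protocols each define their own hash functions and identifier formats, and the helper names `content_id` and `verify` are made up for this example.

```python
import hashlib


def content_id(data: bytes) -> str:
    """Derive an identifier from the content itself."""
    return hashlib.sha256(data).hexdigest()


def verify(data: bytes, expected_id: str) -> bool:
    """Check that what a peer sent us is really the content we asked for."""
    return content_id(data) == expected_id


original = b"fix: handle empty README"
wanted = content_id(original)

# Any peer can serve the content: we don't need to trust the peer, only the hash.
print(verify(original, wanted))
print(verify(b"fix: handle empty README (tampered)", wanted))
```

The first check succeeds and the second fails, because changing even one byte of the content changes its hash.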

Semantics

TODO: explaining the semantics of a forge and difference between checkout based and patch based VCS

Storage

With proper semantics in mind, we can now determine how and where to store the issues and merge requests. Some projects attempt to store information directly into the versioned repository, by using well-known folders and sometimes a dedicated branch.
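To make the idea of in-repository storage concrete, here is a hedged sketch. The `.issues` folder name, the JSON layout and the function names are all assumptions made up for this example; they do not describe the format of any existing tool.

```python
import hashlib
import json
import tempfile
from pathlib import Path

ISSUES_DIR = ".issues"  # hypothetical well-known folder inside the repository


def save_issue(repo_root, title, body):
    """Write an issue as a JSON file that can be committed like any other file."""
    issue = {"title": title, "body": body, "status": "open"}
    payload = json.dumps(issue, sort_keys=True, indent=2)
    # Name the file after a hash of its content to avoid clashes between peers.
    issue_id = hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
    folder = Path(repo_root) / ISSUES_DIR
    folder.mkdir(parents=True, exist_ok=True)
    (folder / f"{issue_id}.json").write_text(payload, encoding="utf-8")
    return issue_id


def list_issues(repo_root):
    """Read back every issue stored in the well-known folder."""
    folder = Path(repo_root) / ISSUES_DIR
    if not folder.is_dir():
        return {}
    return {
        path.stem: json.loads(path.read_text(encoding="utf-8"))
        for path in sorted(folder.glob("*.json"))
    }


with tempfile.TemporaryDirectory() as repo:
    new_id = save_issue(repo, "README is not displayed", "Steps to reproduce: ...")
    print(list_issues(repo)[new_id]["title"])
```

Since issues are plain files, they travel with every clone and can be merged like code, which is exactly why such tools need no server.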

TODO: give names and examples

While these projects are very promising for decentralized information tracking by providing useful semantics over a VCS, I did not find one that tried to implement a specific merging strategy. While this is a feature for projects with an established workflow, it does not crack the decentralized forge problem.

Some other approaches described in the next section either rely on communicating with a forge server, or propagating the changes through global consensus (blockchain) or gossip.

Signaling

Back to: how to submit new issues and merge requests and let our peers know about them.

Federated system

In a centralized or federated setup, we use a separate, out-of-band protocol to communicate with our forge (client-to-server) and tell it to open an issue. As our forge is considered the central source of truth for the repository, it can then spread this information to our peers and we can call it a day.

But what if I want to open an issue on your repository, on your server, where I don't have an account? Either your forge allows me to log in and use the previously-mentioned client-to-server protocol to open an issue, or we need a federation protocol (server-to-server) so that I can create an issue on my forge, and my forge can let yours know about it.

In both scenarios, my forge vouches for my identity. But the second approach has two clear upsides:

- having a federation protocol from the beginning gives us building blocks for backup/migration mechanisms across servers

- it avoids letting strangers log in on your forge, which can lead to mayhem if permissions are misconfigured (maybe someone from another server can open a project on your forge??)
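As a rough idea of what a server-to-server message could look like, here is a sketch loosely modelled on the ActivityStreams vocabulary used by ActivityPub. The exact field layout, the `Ticket` object type and every URL below are assumptions for illustration, not a faithful rendering of any specification.

```python
import json


def build_issue_activity(actor, target_repo, title, body):
    """Build the message my forge would send to yours to open an issue."""
    return {
        "@context": "https://www.w3.org/ns/activitystreams",
        "type": "Create",
        "actor": actor,       # my identity, vouched for by my own forge
        "to": [target_repo],  # the repository hosted on your forge
        "object": {
            "type": "Ticket",  # assumed object type for an issue
            "attributedTo": actor,
            "context": target_repo,
            "summary": title,
            "content": body,
        },
    }


activity = build_issue_activity(
    actor="https://my-forge.example/users/alice",  # hypothetical URLs
    target_repo="https://your-forge.example/repos/bar",
    title="README is not displayed",
    body="Steps to reproduce: ...",
)
print(json.dumps(activity, indent=2))
```

The point is that I never log in on your server: my forge signs and delivers the message, and yours decides what to do with it.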

Peer-to-peer system

In a peer-to-peer setup, things are different because there is no central source of truth. So in order to propagate changes to the repository, we either need to convince everyone on the network to advertise our changes (global consensus), or we can advertise them ourselves and wait for peers who trust us to propagate them (gossip).
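The gossip approach can be illustrated with a toy simulation. This is a simplified model under my own assumptions (a static trust map, no network, no message expiry), with made-up peer names; real gossip protocols are considerably more involved.

```python
from collections import deque


def gossip(trusts, origin):
    """Return the set of peers eventually reached by a message from origin.

    trusts maps each peer to the set of peers whose messages it accepts
    and relays further.
    """
    reached = {origin}
    queue = deque([origin])
    while queue:
        sender = queue.popleft()
        for peer, trusted in trusts.items():
            # A peer only picks up (and relays) messages from peers it trusts.
            if peer not in reached and sender in trusted:
                reached.add(peer)
                queue.append(peer)
    return reached


trusts = {
    "alice": {"bob"},
    "bob": {"alice", "carol"},
    "carol": {"bob"},
    "dave": {"mallory"},
    "mallory": set(),
}

print(sorted(gossip(trusts, "alice")))
print(sorted(gossip(trusts, "mallory")))
```

A message from alice reaches bob and then carol, while a message from mallory only reaches dave: nobody needs a global view of the network, and spam stays confined to the peers who chose to trust its source.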

The first approach is usually achieved through blockchains. While pursuing a global consensus is a noble task, it's a famously hard problem to crack! Bitcoin, for instance, wastes countless computing resources in order to achieve consensus through a process called Proof of Work (TODO: link). While consuming more electricity than many small countries, the Bitcoin network is still famous for its slow throughput (processing transactions is either very costly or very slow) and can be abused by Sybil-type attacks (TODO: link).
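To give an intuition of why Proof of Work burns so much computing power, here is a toy version. It only captures the general principle (brute-forcing a number until a hash meets a condition) and is not Bitcoin's actual algorithm; the function names and the "leading zeros" difficulty rule are simplifications chosen for this example.

```python
import hashlib


def digest_for(payload: bytes, nonce: int) -> str:
    return hashlib.sha256(payload + str(nonce).encode("ascii")).hexdigest()


def proof_of_work(payload: bytes, difficulty: int) -> int:
    """Try nonces one by one until the hash starts with enough zeros."""
    prefix = "0" * difficulty
    nonce = 0
    while not digest_for(payload, nonce).startswith(prefix):
        nonce += 1
    return nonce


def check(payload: bytes, nonce: int, difficulty: int) -> bool:
    """Verifying a proof takes a single hash, however long it took to find."""
    return digest_for(payload, nonce).startswith("0" * difficulty)


payload = b"alice opens issue #1 on foo/bar"
nonce = proof_of_work(payload, difficulty=3)
print(nonce, check(payload, nonce, difficulty=3))
```

Each extra zero required multiplies the expected number of attempts by 16, and all the hashes computed along the way are thrown away.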

Also, in order to reach global consensus within the blockchain, each active node usually needs to know the whole network's history of transactions. Verifying each transaction that ever occurred on the network requires a lot of computing power, and a lot of storage space. Therefore, as the network grows, more and more user devices become incapable of active participation in the network, and the power gets concentrated into the hands of the most powerful (TODO: maybe give example bitcoin? or maybe too much information?)

Of course, some mitigations can be put in place, and not all consensus-reaching systems are as defective-by-design as Bitcoin. However, until some such technology proves capable of high throughput while upholding decentralization, so-called blockchains appear to be a dead end filled with corporate snake-oil.

A notable exception is the radicle project, which wants to build a reward mechanism for miners on a proof-of-work blockchain. This is surprising because the radicle project seems to be driven by enthusiasts and not a greedy corporation.

TODO: now explain gossip stuff

There was a discussion on radicle forums about using a gossip protocol instead of a blockchain, but this discussion has not reached a conclusion (yet).

DHT is a very good balance. It does local consensus (not local geographically, but local as in people of the same interest) to share pieces of global state by gossip.

Global consensus is usually an anti-pattern, as outlined in naming systems (see the reasoning behind DNS delegation and GNS petnames).

TODO: also mention SSB?

Access control / spam prevention

Ok, now we can send messages. Now we want to make sure we don't receive too many. Of course, rate limiting applies.

In a p2p fashion, I don't know of any approach apart from a web of trust. In a federated fashion, we can do opt-in federation or something like that: TOFU (trust on first use), as in, if one issue/merge request is accepted from a server, then that server is whitelisted for federation with ours. Or we could go for even more complex reputation/karma systems.
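The TOFU idea combined with basic rate limiting could look like the following sketch. The class name, the method names and the limit of pending contributions are all hypothetical; this is one possible reading of the idea, not how any existing forge behaves.

```python
class FederationGate:
    """Decide what to do with contributions coming from other servers."""

    def __init__(self, max_pending=3):
        self.trusted = set()  # servers whitelisted for federation
        self.pending = {}     # server -> contributions awaiting review
        self.max_pending = max_pending

    def receive(self, server, contribution):
        if server in self.trusted:
            return "accepted"
        queue = self.pending.setdefault(server, [])
        if len(queue) >= self.max_pending:
            # Crude rate limiting: unknown servers cannot flood the review queue.
            return "rejected"
        queue.append(contribution)
        return "pending"

    def approve(self, server):
        """A maintainer accepted one contribution: trust this server from now on."""
        self.trusted.add(server)
        return self.pending.pop(server, [])


gate = FederationGate(max_pending=2)
print(gate.receive("forge.example", "issue: typo in README"))
print(gate.receive("forge.example", "merge request: fix typo"))
print(gate.receive("forge.example", "issue: one more thing"))
gate.approve("forge.example")
print(gate.receive("forge.example", "issue: one more thing"))
```

The first two contributions wait for review, the third is refused, and once a maintainer approves the server, later contributions go straight through.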

A look at some solutions

some solutions:

- Artemis: https://www.mrzv.org/software/artemis/ integrated into mercurial (hg subcommand) with partial git support

- bugseverywhere: https://github.com/kalkin/be VCS-agnostic

- git-dit: https://github.com/neithernut/git-dit git specific

- ditz: https://github.com/jashmenn/ditz VCS-agnostic

- ticgit: https://github.com/jeffWelling/ticgit web interface + git specific

- git-bug: https://github.com/MichaelMure/git-bug git-specific

- git-ssb: https://git.scuttlebot.io/%25n92DiQh7ietE%2BR%2BX%2FI403LQoyf2DtR3WQfCkDKlheQU%3D.sha256 git-specific but very interesting anyway because it could work with others and does out of band stuff

- git-issue: https://github.com/dspinellis/git-issue git-specific (bridges to Github/Gitlab)

- sit: https://github.com/sit-fyi/issue-tracking SCM-agnostic

in the same family:

- git-appraise: https://github.com/google/git-appraise

- git-notes: https://git-scm.com/docs/git-notes to investigate?

TODO: why have i not mentioned fossil SCM? i need to dig into this again but the docs are so dense it's hard to have a global overview of how it operates in a decentralized manner

TODO: nice-to-haves from radicle: signed commits and maintainership identity bootstrapping through crypto

Conclusion

Many projects we just reviewed are far from ready for decentralized forging. They're still doing a very good job at pushing the ecosystem towards selfhosted, free software solutions that empower us and not our corporate overlords. This needs to be praised.

The intent of this article is not to criticize or anger people because their favorite forge is the best in the universe. I just wanted to take a look at the ecosystem, see the reasons that brought us here, and reflect on how we go on from here and bridge the gaps.

I don't think there's a single good way to do this (although there are many bad ways to do this), but we need both federated and p2p solutions to these problems, and maybe we need to break the false federated/p2p binary: they serve different purposes but they mix very well together, and I don't see why a federated forge could not cooperate with a p2p forge.

There might be some information loss on the way (for example, unsigned commits from Gitlab may not be imported with a proper signature for radicle, although some trusted radicle nodes (the maintainers) could sign those commits in good faith).

Decentralized VCS and decentralized forge

git is already a decentralized VCS, so there are several ways forward:

- we just add some layer on top (federation semantics with ActivityPub (forgefed) or XMPP)

- or we encourage out-of-band decentralized interactions (like sourcehut does with its email-first workflow)

- or we take the problem in-band (git-issue), but that does not solve everything!

- or we do like https://radicle.xyz/, a bit of all this on top of a p2p network

what it takes to build a decentralized forge:

- either I can open issues/merge requests and receive notifications from any instance (federated forge); this can of course be turned off for some internal projects that should be private

- or we consider instances and web gateways to be mere components that should not play any role more than displaying information